Deep Netts v2.0 Has Been Released

Deep Netts 2.0.0 is out! Download it here

With the 2.0 release, Deep Netts has reached an important milestone, after being tested in real-world use cases and pilot projects.

Deep Netts 2.0 combines ease of use with competitive performance and simplified integration.

We’re grateful for the great community feedback and participation, and especially for the contributions from Mani Sarkar and Krasimir Topchiyski.

Deep Netts is now free for development, and we also offer opportunities for free low-volume production licenses.

Main new features

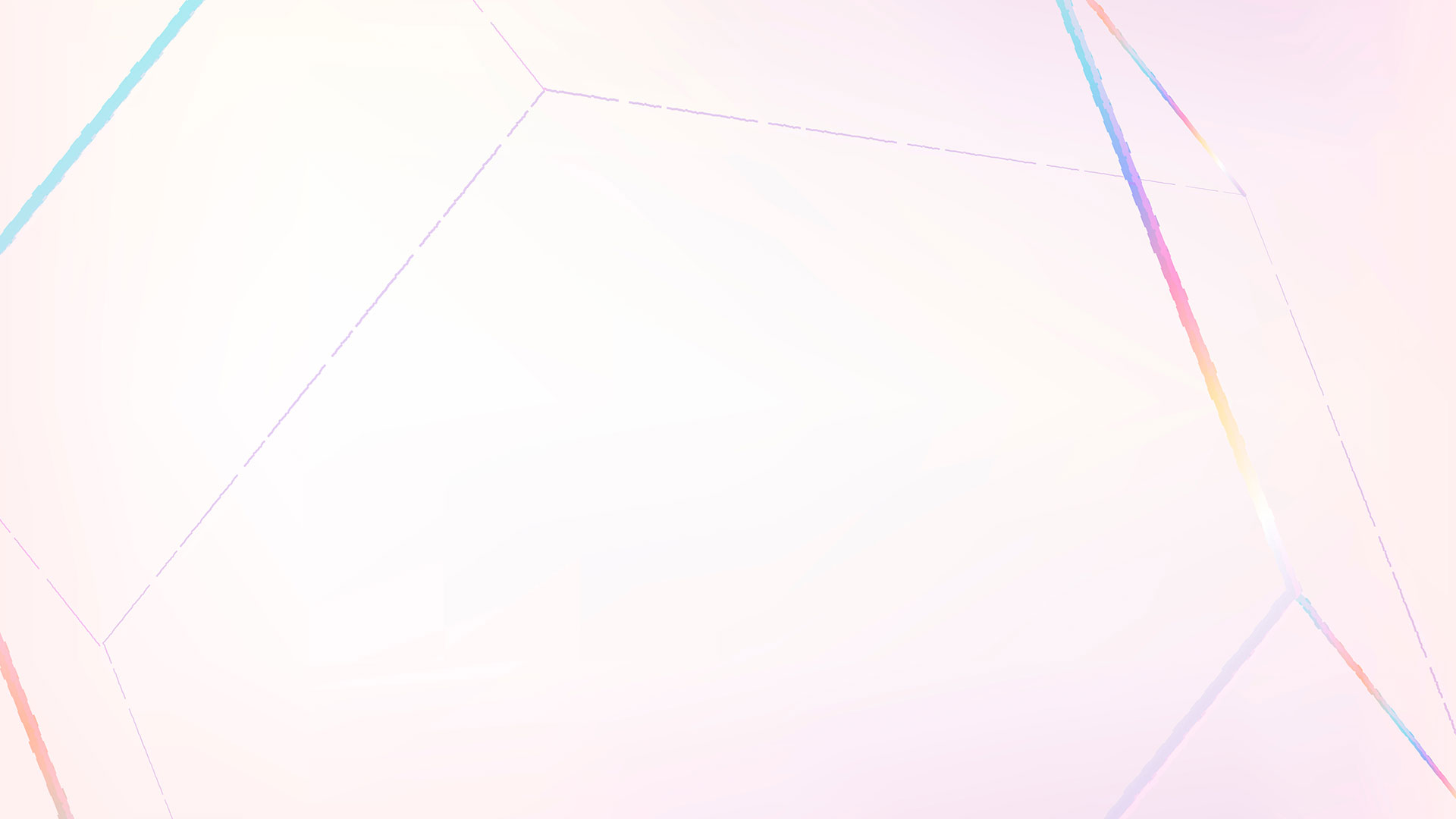

1. Visual Machine Learning Workflow

The entire model-building workflow is visualized, making it easy to follow and edit, which helps structure and speed up experiments.

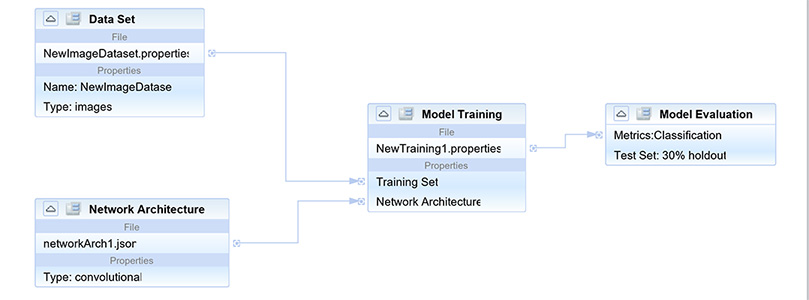

2. Auto ML Support

Automated search for the best architecture and training parameters for feed forward networks, available both in the GUI and through the API.

See the Java code example on GitHub.
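To illustrate the general idea behind automated parameter search, here is a minimal grid search sketch in plain Java. It is NOT the Deep Netts AutoML API: the `Config` record, the `GridSearch` class and the scoring callback are hypothetical, and the real search may explore the space differently (for example, randomly rather than exhaustively). In a real setup the scorer would train a network with the given settings and return a validation metric.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.ToDoubleFunction;

// Hypothetical hyperparameter combination; not a Deep Netts type.
record Config(int hiddenNeurons, float learningRate) { }

// Generic exhaustive grid search; NOT the Deep Netts AutoML API.
final class GridSearch {

    // Builds every combination of the candidate hidden layer sizes and learning rates.
    static List<Config> grid(int[] hiddenSizes, float[] learningRates) {
        List<Config> configs = new ArrayList<>();
        for (int h : hiddenSizes) {
            for (float lr : learningRates) {
                configs.add(new Config(h, lr));
            }
        }
        return configs;
    }

    // Scores each configuration and returns the highest-scoring one.
    // In practice the scorer would train a network with these settings
    // and return a validation metric such as accuracy (higher is better).
    static Config best(List<Config> configs, ToDoubleFunction<Config> scorer) {
        if (configs.isEmpty()) {
            throw new IllegalArgumentException("no configurations to search");
        }
        Config best = configs.get(0);
        double bestScore = scorer.applyAsDouble(best);
        for (Config c : configs.subList(1, configs.size())) {
            double score = scorer.applyAsDouble(c);
            if (score > bestScore) {
                bestScore = score;
                best = c;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        List<Config> configs = grid(new int[] {4, 8, 16}, new float[] {0.01f, 0.1f});
        // Stand-in scorer that prefers 8 hidden neurons and a smaller learning rate.
        Config winner = best(configs, c -> -Math.abs(c.hiddenNeurons() - 8) - c.learningRate());
        System.out.println("Best configuration: " + winner);
    }
}
```

With the stand-in scorer, the search picks 8 hidden neurons with a learning rate of 0.01. Exhaustive search is simple and deterministic, but its cost grows multiplicatively with each added parameter.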

3. Improved K-Fold Cross Validation

Computes a final average metric across all folds, provides access to the best model, and includes an internal bug fix. Training and evaluation are logged in detail for every fold, both in the GUI and in code.
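The sketch below shows what k-fold cross validation with a final average and a best fold means, in plain Java. It is NOT the Deep Netts cross validation API: the `KFold` class, the `CvResult` record and the `trainAndScore` callback are hypothetical, folds are contiguous index ranges (assuming the data has already been shuffled), and "best" assumes a metric where higher is better.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

// Generic k-fold cross validation sketch; NOT the Deep Netts API.
final class KFold {

    // Per-fold scores, their average, and the index of the best fold.
    record CvResult(double[] foldScores, double average, int bestFold) { }

    // Splits indices 0..n-1 into k contiguous folds whose sizes differ by at most one.
    // Assumes the data set has already been shuffled.
    static List<int[]> folds(int n, int k) {
        if (k < 2 || k > n) {
            throw new IllegalArgumentException("need 2 <= k <= n");
        }
        List<int[]> result = new ArrayList<>();
        int start = 0;
        for (int i = 0; i < k; i++) {
            int size = n / k + (i < n % k ? 1 : 0);
            int[] fold = new int[size];
            for (int j = 0; j < size; j++) {
                fold[j] = start + j;
            }
            result.add(fold);
            start += size;
        }
        return result;
    }

    // Each fold serves once as the test set; the remaining folds form the training set.
    // trainAndScore is a hypothetical callback: train on the first index array,
    // evaluate on the second, and return a metric where higher is better.
    static CvResult crossValidate(int n, int k, BiFunction<int[], int[], Double> trainAndScore) {
        List<int[]> folds = folds(n, k);
        double[] scores = new double[k];
        double sum = 0;
        int best = 0;
        for (int i = 0; i < k; i++) {
            int[] test = folds.get(i);
            int[] train = new int[n - test.length];
            int t = 0;
            for (int j = 0; j < k; j++) {
                if (j == i) {
                    continue;
                }
                for (int idx : folds.get(j)) {
                    train[t++] = idx;
                }
            }
            scores[i] = trainAndScore.apply(train, test);
            sum += scores[i];
            if (scores[i] > scores[best]) {
                best = i;
            }
        }
        return new CvResult(scores, sum / k, best);
    }

    public static void main(String[] args) {
        // Stand-in scorer: a fake metric derived from the test indices.
        CvResult r = crossValidate(10, 3, (train, test) -> (double) test[0]);
        System.out.println("average = " + r.average() + ", best fold = " + r.bestFold());
    }
}
```

Keeping the index of the best fold lets a caller retrieve the model trained on that split, while the average gives a less optimistic estimate than any single fold.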

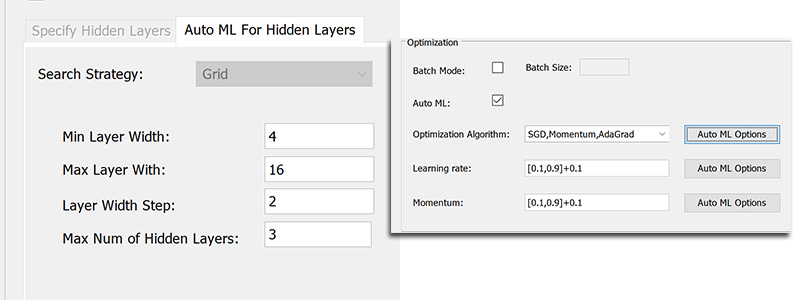

4. Easier and Better Evaluation

Evaluation results now include more metrics (including the new RegressionMetrics), along with explanations of what each metric means.

Evaluation can be performed with a single method call, and the network automatically infers which type of evaluation to perform:

```java
EvaluationMetrics evalResult = neuralNet.test(trainTest.getTestSet());
```
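For readers unfamiliar with regression metrics, the following plain Java sketch computes a few standard ones: mean squared error, root mean squared error, mean absolute error and the coefficient of determination (R²). It is NOT the Deep Netts RegressionMetrics class; the `RegressionMetricsSketch` name is hypothetical, and the exact set of metrics Deep Netts reports may differ.

```java
// Standard regression metrics; a sketch, NOT the Deep Netts RegressionMetrics class.
final class RegressionMetricsSketch {

    // Rejects empty or mismatched inputs.
    private static void check(double[] actual, double[] predicted) {
        if (actual.length == 0 || actual.length != predicted.length) {
            throw new IllegalArgumentException("arrays must be non-empty and of equal length");
        }
    }

    // Mean squared error: average of squared residuals.
    static double mse(double[] actual, double[] predicted) {
        check(actual, predicted);
        double sum = 0;
        for (int i = 0; i < actual.length; i++) {
            double e = actual[i] - predicted[i];
            sum += e * e;
        }
        return sum / actual.length;
    }

    // Root mean squared error: expressed in the same units as the target.
    static double rmse(double[] actual, double[] predicted) {
        return Math.sqrt(mse(actual, predicted));
    }

    // Mean absolute error: average of absolute residuals.
    static double mae(double[] actual, double[] predicted) {
        check(actual, predicted);
        double sum = 0;
        for (int i = 0; i < actual.length; i++) {
            sum += Math.abs(actual[i] - predicted[i]);
        }
        return sum / actual.length;
    }

    // Coefficient of determination: 1 - SS_res / SS_tot.
    // Undefined (division by zero) when all actual values are equal.
    static double rSquared(double[] actual, double[] predicted) {
        check(actual, predicted);
        double mean = 0;
        for (double a : actual) {
            mean += a;
        }
        mean /= actual.length;
        double ssRes = 0;
        double ssTot = 0;
        for (int i = 0; i < actual.length; i++) {
            ssRes += (actual[i] - predicted[i]) * (actual[i] - predicted[i]);
            ssTot += (actual[i] - mean) * (actual[i] - mean);
        }
        return 1.0 - ssRes / ssTot;
    }

    public static void main(String[] args) {
        double[] actual = {1.0, 2.0, 3.0};
        double[] predicted = {1.0, 2.0, 4.0};
        System.out.printf("MSE=%.4f RMSE=%.4f MAE=%.4f R2=%.4f%n",
                mse(actual, predicted), rmse(actual, predicted),
                mae(actual, predicted), rSquared(actual, predicted));
    }
}
```

For the sample arrays, MSE and MAE are both 1/3 and R² is 0.5. RMSE and MAE are easier to interpret because they share the target's units, while R² compares the model against simply predicting the mean.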

5. Better API Documentation

The API documentation now includes more information to help developers understand the purpose of each class and method in the context of machine learning. Check it out here.

6. Improved Java Code Examples

More examples, with more detailed explanations in the comments. Each example can be used as a starter project for the corresponding type of problem.

All examples are available in the GitHub repo.

7. GUI Fixes and Algorithm Improvements

Many small fixes that improve overall stability, accuracy, and performance.